As part of improving GitHint.com, I implemented a simple A/B testing tool using Cloudflare Workers.

The goal is a tool that lets me test two variants of a page and count which version leads to more actions (e.g. a click or a subscription). That way I can choose the better-performing variant based on data instead of guessing.

Since most of my projects already use Cloudflare as a CDN, I decided to give Cloudflare Workers a try.

This post explains how the A/B testing tool works and how it is implemented, and it shows a demo.

To set up an A/B test, we need two pages and an action to track:

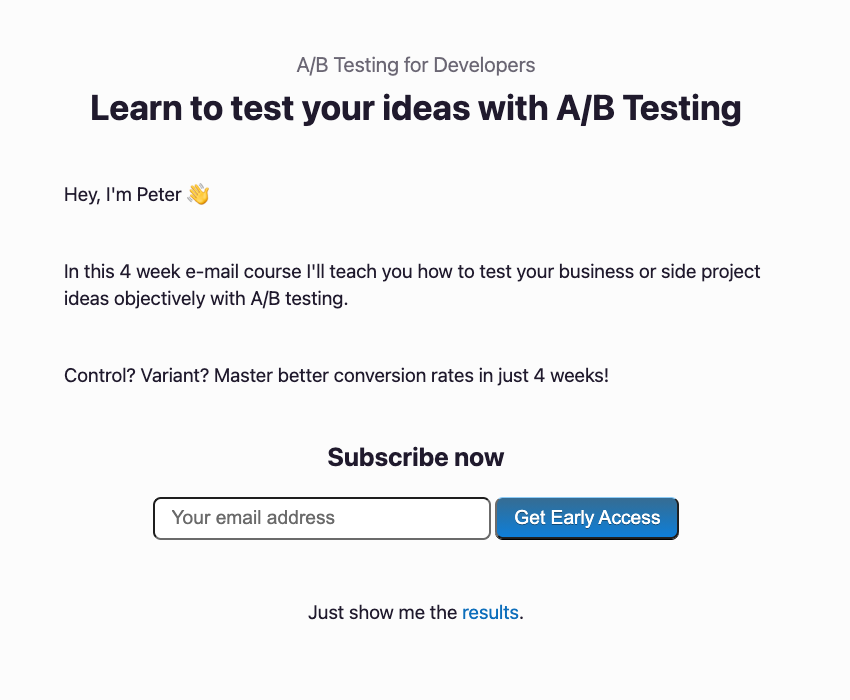

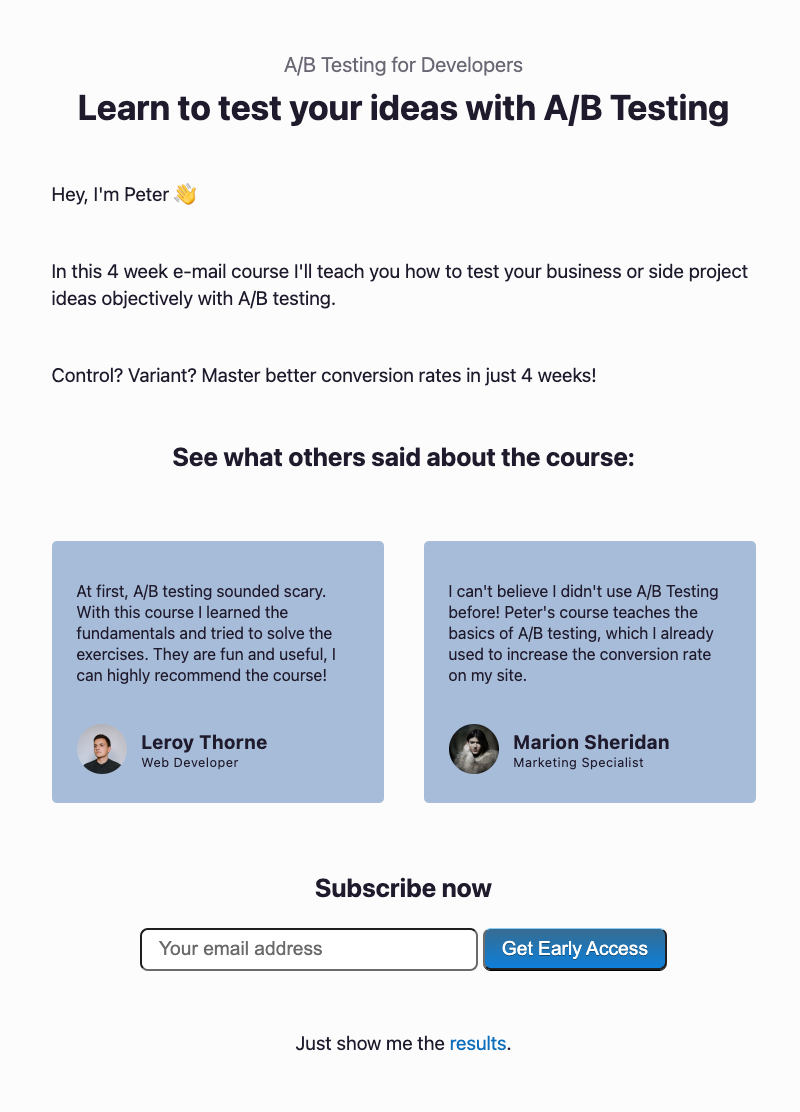

As an example, we test two versions of a subscription page. The control version is a page with a fake course description and a subscribe form. We test it against the same page and form with testimonials added. Our hypothesis is that testimonials convince more people to subscribe. The action we count is the form submission on both versions.

You can try the A/B test on ptrlaszlo.com/abtest, where you will be randomly assigned to one of the groups. We don't want to show a different version on each refresh, so the assigned group is stored in a cookie.
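The post doesn't show the setAbTestCookie helper that the worker uses to store the group. The sketch below shows one way it could work. It assumes a cookie named abtest, a 30-day lifetime and a helper named abTestCookie. All three are hypothetical and not taken from the original project. HttpOnly keeps page scripts from reading the cookie, Secure limits it to HTTPS, and Path=/abtest scopes it to the tested pages.

```javascript
// Hypothetical cookie name and lifetime; the original project may differ.
const AB_COOKIE_NAME = "abtest";
const AB_COOKIE_MAX_AGE = 60 * 60 * 24 * 30; // 30 days, in seconds

// Build the Set-Cookie value that pins a visitor to "control" or "variant".
function abTestCookie(group) {
  return `${AB_COOKIE_NAME}=${group}; Max-Age=${AB_COOKIE_MAX_AGE}; Path=/abtest; Secure; HttpOnly; SameSite=Lax`;
}

// One possible setAbTestCookie. Headers of a response returned by fetch()
// in a Worker are immutable, so copy the response before adding the cookie.
function setAbTestCookie(response, group) {
  const copy = new Response(response.body, response);
  copy.headers.append("Set-Cookie", abTestCookie(group));
  return copy;
}
```

Because the cookie carries Max-Age, it outlives the browser session, so a visitor who returns within the window sees the same variant.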

When your site uses Cloudflare as a CDN, Workers can modify requests before they reach your server. This gives us everything we need for A/B testing: changing the request path, setting cookies and counting events.
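Changing the path means the worker fetches a different page from the origin, while the visitor's address bar still shows /abtest. The sketch below shows that rewrite as a small pure function. It uses the /abtest, /abtest/control and /abtest/variant paths from this post. The function name rewriteForGroup is hypothetical.

```javascript
// Paths used in this post: the tested URL and the two pages behind it.
const BASE_PATH = "/abtest";
const CONTROL_PATH = "/abtest/control";
const VARIANT_PATH = "/abtest/variant";

// Compute the origin URL to fetch for a visitor in `group`.
// Only the pathname changes; scheme, host and query string are preserved.
function rewriteForGroup(requestUrl, group) {
  const url = new URL(requestUrl);
  if (url.pathname !== BASE_PATH) {
    // Leave other paths, such as /abtest/result, untouched.
    return url.toString();
  }
  url.pathname = group === "variant" ? VARIANT_PATH : CONTROL_PATH;
  return url.toString();
}
```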

The request flow looks like this:

You can find the full code on GitHub.

The code is a wrangler project. In the wrangler.toml file, we set which routes the worker should intercept:

```toml
route = "ptrlaszlo.com/abtest*"
```

The worker code that handles the requests and the Durable Objects used for counting both live in index.mjs.
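The post points to Cloudflare's examples for the Durable Object itself rather than showing it. The sketch below shows a minimal counter modeled on Cloudflare's counter example. It assumes that increment requests arrive on a URL whose path is /increment and that a request to / only reads the value. In index.mjs, the class would be exported (export class Counter) so the COUNTER binding can find it. The export is left off here only so the snippet runs as a plain script.

```javascript
// Minimal counter Durable Object (sketch). In index.mjs: `export class Counter`.
class Counter {
  constructor(state, env) {
    // state.storage is the object's private, persistent key-value storage.
    this.state = state;
  }

  // Each counter name (e.g. "control-show") maps to its own object instance,
  // so a single "value" key per object is enough.
  async fetch(request) {
    const url = new URL(request.url);
    let value = (await this.state.storage.get("value")) || 0;
    switch (url.pathname) {
      case "/increment":
        value++;
        await this.state.storage.put("value", value);
        break;
      case "/":
        // Read-only: return the current value.
        break;
      default:
        return new Response("Not found", { status: 404 });
    }
    return new Response(String(value));
  }
}
```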

In our case, the A/B tested path is /abtest, defined in the BASE_PATH variable.

Every request on this path is checked for cookies to see whether the user was already assigned to a group.
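The post doesn't show the cookie checks hasControlCookie and hasVariantCookie. The sketch below shows one possible implementation. It assumes a hypothetical cookie named abtest and two hypothetical helpers, parseCookies and getAbTestGroup. Any cookie value other than "control" or "variant" is treated as unassigned, so a tampered cookie simply leads to a fresh assignment.

```javascript
// Hypothetical cookie name; it must match the name used when setting it.
const AB_COOKIE_NAME = "abtest";

// Parse a Cookie header such as "a=1; b=2" into a plain object.
function parseCookies(header) {
  const cookies = {};
  if (!header) return cookies;
  for (const part of header.split(";")) {
    const eq = part.indexOf("=");
    if (eq === -1) continue; // skip malformed entries
    cookies[part.slice(0, eq).trim()] = part.slice(eq + 1).trim();
  }
  return cookies;
}

// Return "control", "variant", or null if the visitor has no valid group yet.
function getAbTestGroup(request) {
  const group = parseCookies(request.headers.get("Cookie"))[AB_COOKIE_NAME];
  return group === "control" || group === "variant" ? group : null;
}

function hasControlCookie(request) {
  return getAbTestGroup(request) === "control";
}

function hasVariantCookie(request) {
  return getAbTestGroup(request) === "variant";
}
```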

Note how url.pathname is changed to show either the control page or the variant page, which live under /abtest/control and /abtest/variant respectively.

If the user has not been assigned to a group yet, we pick one at random, increment the corresponding counter and show the corresponding page.

```javascript
// Fragment of the worker's switch over url.pathname
case BASE_PATH:
  if (hasControlCookie(request)) {
    url.pathname = CONTROL_PATH;
    // Fetch the rewritten url; fetch(request) alone would ignore the new pathname
    return await fetch(url, request);
  } else if (hasVariantCookie(request)) {
    url.pathname = VARIANT_PATH;
    return await fetch(url, request);
  } else {
    const group = Math.random() < 0.5 ? "variant" : "control";
    // Only first visits are counted here, so page refreshes aren't counted twice.
    // The increment is awaited so it isn't dropped once the response is returned.
    if (group === "variant") {
      url.pathname = VARIANT_PATH;
      await incrementCounter(COUNTER_VARIANT_SHOW, env);
    } else {
      url.pathname = CONTROL_PATH;
      await incrementCounter(COUNTER_CONTROL_SHOW, env);
    }
    const response = await fetch(url, request);
    // Store the group so the visitor keeps seeing the same page
    return setAbTestCookie(response, group);
  }
  break;
```

To save the results, we need a way to count user actions. We use Durable Objects, Cloudflare's fast, strongly consistent storage. To access Durable Objects from our worker, we need to provide the Durable Object class and declare it in the toml file:

```toml
[durable_objects]
bindings = [{name = "COUNTER", class_name = "Counter"}]
```

Workers communicate with Durable Objects through the fetch API. You can find examples on the Durable Objects developer pages. For us, the important part is having a separate counter for every event we want to track, both page visits and actions taken:

```javascript
const COUNTER_CONTROL_SHOW = 'control-show';
const COUNTER_CONTROL_ACTION = 'control-action';
const COUNTER_VARIANT_SHOW = 'variant-show';
const COUNTER_VARIANT_ACTION = 'variant-action';
```

We increment them when the corresponding event happens:

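```javascript
// For example, when a visitor on the control page submits the form:
await incrementCounter(COUNTER_CONTROL_ACTION, env);

// Ask the Durable Object for this counter name to increment its value.
async function incrementCounter(key, env) {
  const id = env.COUNTER.idFromName(key); // same name -> same object
  const obj = env.COUNTER.get(id);
  // fetch() needs an absolute URL; the host is only a placeholder,
  // the stub decides which object receives the request
  const resp = await obj.fetch("https://counter/increment");
  return await resp.text();
}
```

To read the Durable Object values of our counters, we added an extra endpoint to the worker, /abtest/result, which returns the counter values as a JSON object: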

```json
{
  "controlShow": "16",
  "controlAction": "5",
  "variantShow": "14",
  "variantAction": "9"
}
```

From these counts, we can calculate what percentage of control and variant page visits led to a subscription.
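The ratios are simple arithmetic on the four counters. The sketch below assumes the JSON shape shown above, where the counts are serialized as strings. The name conversionRates is hypothetical.

```javascript
// Turn the /abtest/result counters into conversion rates (actions / visits).
function conversionRates(result) {
  const rate = (actions, shows) => {
    const a = Number(actions); // counts arrive as strings
    const s = Number(shows);
    return s > 0 ? a / s : 0; // avoid dividing by zero before any visits
  };
  return {
    control: rate(result.controlAction, result.controlShow),
    variant: rate(result.variantAction, result.variantShow),
  };
}
```

With the sample values above, the control page converts 5 of 16 visits (31.25%) and the variant converts 9 of 14 (about 64.3%).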

There is much more to consider when A/B testing a change. Open questions include how long the test should run, what to test, how to split users into groups, and whether the results are statistically significant. These topics are, however, out of scope for this post. Let me know what you think about using Workers for A/B testing!

P.S. I'm ptrlaszlo on Twitter; follow me for more stories.